It was exactly 7 years ago that I first tried a fitness band. I had won a GOQii band at the WPP Stream India event in Jaipur (which I first attended while at Ogilvy), and until then I had no inclination to wear one, since I had stopped wearing a watch many years before.

Since then, I have moved between multiple fitness bands and have, so far, settled on the FitBit band.

Now, the Quantified Self movement has been around for a very long time. Even something as small as checking our temperature is part of the larger idea. At the peak of the devastating second wave of COVID-19 in India, sales of pulse oximeters went through the roof. That too is part of this larger movement.

The basic idea is to reduce our bodily functions to numbers, quantified by a machine, in the belief that we can then make better sense of them.

But the Quantified Self movement is now making much deeper inroads, literally, both inside and outside our bodies.

Consider the Canon Posture Fit camera, announced at the height of the pandemic in February 2021, when work-from-home seemed like the new default (it still is). Much like how a smartwatch detects extended periods of being stationary, the Canon Posture Fit camera, an egg-shaped device placed on your work desk, tracks your sitting posture and periodically alerts you to adjust it and/or to take a walk.

Earlier, we used to remember this ourselves. Now, an external device is left in charge of tracking, remembering, and reminding us of these details.

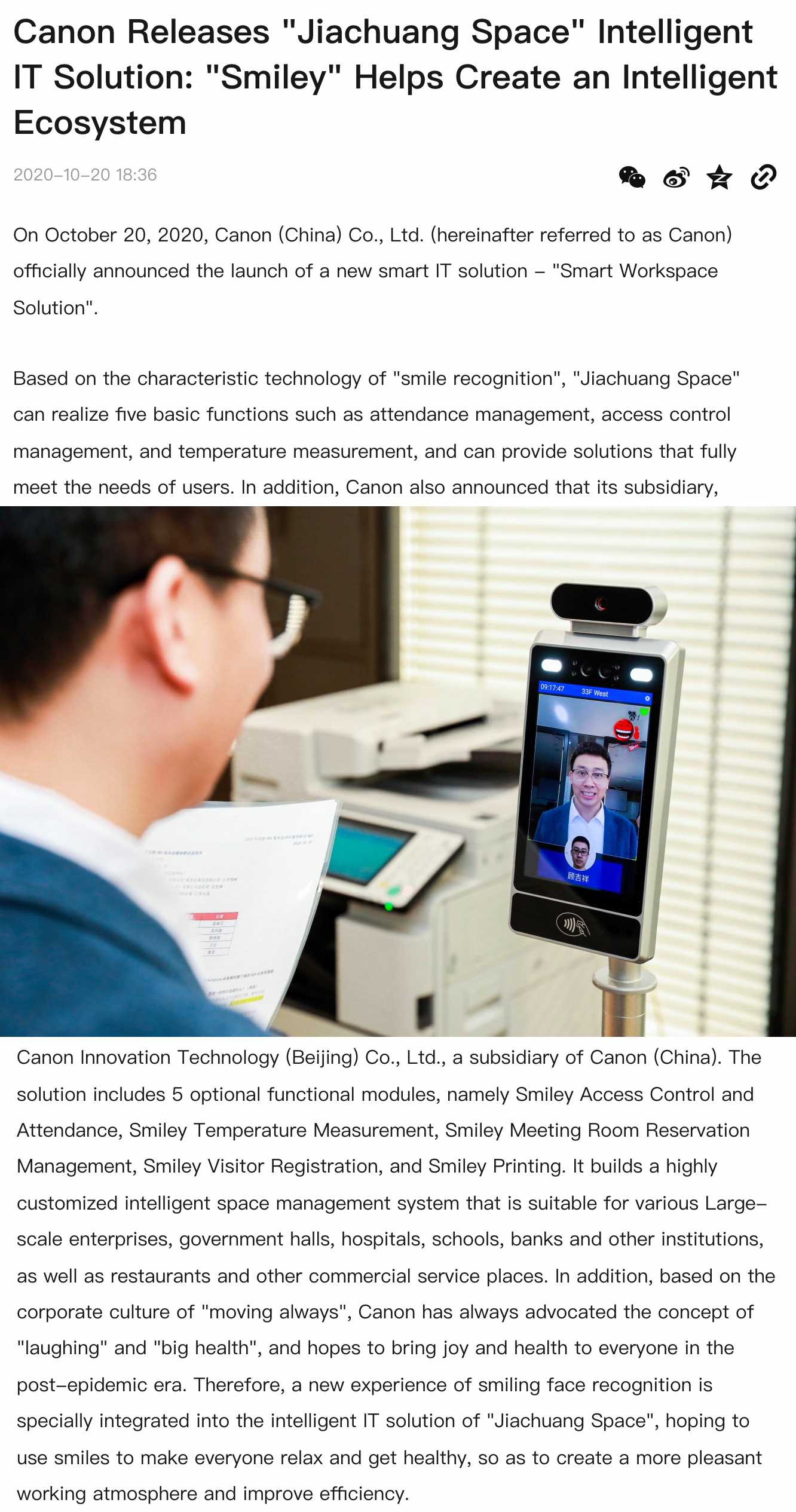

Another Canon device takes matters to a new level – an AI-based camera that tracks employees at work to check if they are ‘smiling’ as a way to ‘promote a positive atmosphere’. I know that sounds straight out of a dystopian sci-fi novel, but this is from Canon in China, so it kind of makes sense.

A UK-based brand called Prevayl is making smart wearable gym clothes that act like ‘second skin so the Sensor can capture the most accurate biometrics, which is transformed into actionable insights’ (according to the brand website).

Gatorade has a ‘sweat patch’! It’s like a band-aid you put on before a workout or a run. When you scan the patch with Gatorade’s app afterwards, it gives you data on your sodium loss and hydration requirements (meaning: to sell you more Gatorade, of course).

Things get even more interesting with the smart clothes that MIT’s Computer Science and Artificial Intelligence Lab is experimenting with. The MIT-designed clothing uses special fibers to sense a person’s movement via touch.

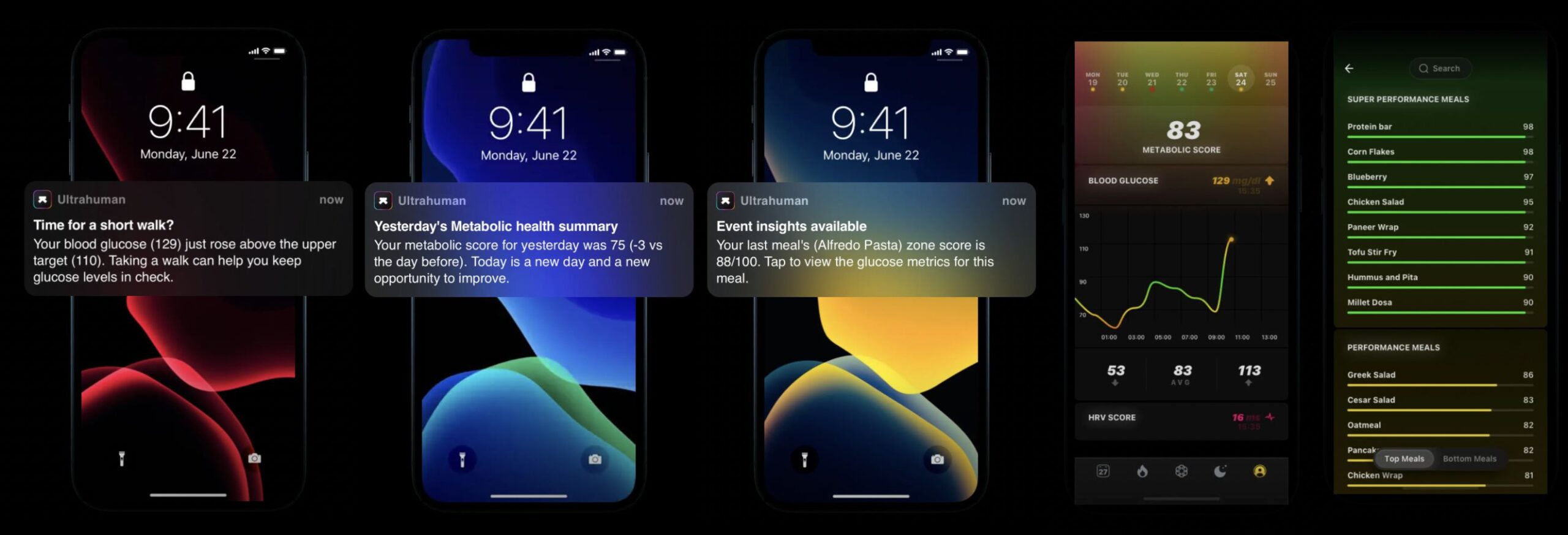

Closer to home (meaning: Bengaluru), a company called Ultrahuman offers patches, paired with its smartphone app, that help you monitor the effects of food, drink and exercise on your glucose levels. Blood glucose is the metric the company tracks and uses as the basis for what it tells you about your own body.

Imagine your blood glucose spiking significantly as you pop that gulab jamun during Diwali! Would you still crave one, knowing that your blood glucose will spike and throw off your numbers?

It’s not very different from seeing a spike in your heart rate (as detected by your smartwatch) when you are angry, upset, shouting at someone or something, or even watching an exciting cricket match. Your watch will detect that spike and may alert you, or even nudge you to calm down.

In fact, the Apple Watch has been credited with real-life interventions like this, and with saving lives as a result. Here’s a story of a man from Indore whose Apple Watch tracked his irregular heartbeat. His family took that data to a doctor, who diagnosed him with conditions that led to open-heart surgery!

And then there are wearables, like Whoop, that offer ‘passive data collection’, have no screen on the device itself, and present data not as the raw measurements they track but as what the wearable believes our outcome should be. Whoop promises to track only your ‘strain’ and ‘recovery’ (including sleep) levels. It does this by tracking your heart rate continuously and giving you a scale to interpret it: 0 to 21 for strain, and 0 to 100 for recovery (I don’t know why ‘21’!). This is different from the direct data we get from other devices, but it still belongs to the same larger ethos: machine-led understanding of the body.

Going by the advances in science, I’m reasonably sure that most of us will be equipped with at least one body-quantifying device. This is not science fiction anymore.

What is disconcerting is that we are outsourcing what used to be intuition and instinct to machines. Have we become too busy to read our own instincts? But busy with what? Or is it more likely that we have become too numb to read them?

The device makes me believe that it is helping. For instance, my FitBit gives me a sleep score for every night’s sleep. So, instead of reading my own instincts about a good night’s sleep, I seem to trust an 80+ score to tell me that I slept well. When did I stop being attuned to my own inner self to judge how good my sleep was? Was it after getting the FitBit, or before? I have no clue.

It’s a bit like how we no longer make use of our memory. Earlier, we used to remember at least a few phone numbers. Now, we depend completely on our phones to remember them, and in an emergency, we absolutely need a charged phone to retrieve those numbers!

Or consider how many kids today hardly know even basic multiplication tables, something that was sacrosanct when an earlier generation was growing up. The generation that learned them by heart can calculate things quickly in their heads, while the next generation uses external gadgets to perform even basic calculations.

And going by how auto-correct functions work across various input devices and tools, we may not need to learn the rules of good writing at all anymore. We just need to supply a basic outline of what we want to communicate, and the software does the rest. But unless we know what good writing is in the first place, we won’t be able to appreciate or understand the final output! Perhaps the software that did the writing would reduce even that understanding to a score: ‘Your outline was 15% while the final output is 98% of what you wanted to communicate effectively’?

From using machines to help us excel to relying on machines simply to let us live… we’re moving in interesting new directions.